Advancing Quantitative Science

with Monte Carlo Simulation

Hok Chio (Mark) Lai

2019/05/16

Monte Carlo Methods

- 1930s-1940s: Nuclear physics

- Key figures:

- Stanislaw Ulam

- John von Neumann

- Nicholas Metropolis

- Manhattan project: hydrogen bomb

- Naming: Casino in Monaco

Why Do We Do Statistics?

- To study some target quantities in the population

- Based on a limited sample

- How do we know that a statistic or statistical method gets us to a reasonable answer?

- Analytic reasoning

- Simulation

MC is one way to understand the properties of one or more statistical procedures

What is MC (in Statistics)?

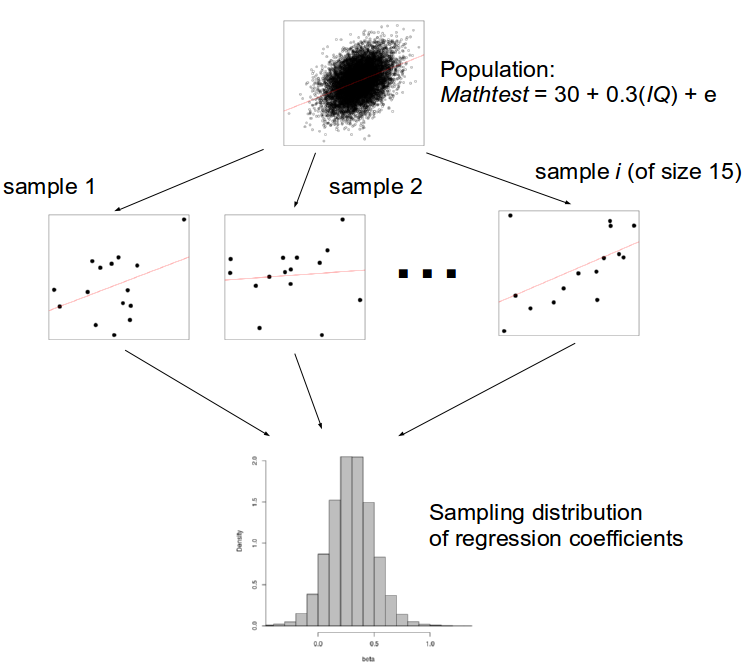

- Simulate the process of repeated random sampling

- E.g., repeatedly drawing samples of 10 IQ scores from a population

- Approximate sampling distributions

- Using pseudorandom samples

- Study properties of estimators

- regression coefficients, fit index

- compare multiple estimators or modeling approaches
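The IQ example above can be sketched in a few lines of R. This is only an illustration: the population is assumed to be N(100, 15²), and the number of simulated samples (`n_rep`) and the seed are arbitrary choices, not values from the slides.

```r
set.seed(2019)   # arbitrary seed, for replicability
n_rep <- 1000    # number of simulated samples (assumed)
n <- 10          # sample size from the example

# Repeatedly draw samples of 10 IQ scores and keep each sample mean
# (assumed population: normal with mean 100 and SD 15)
sample_means <- replicate(n_rep, mean(rnorm(n, mean = 100, sd = 15)))

# The simulated means approximate the sampling distribution of the mean
mean(sample_means)  # should be close to 100
sd(sample_means)    # should be close to the analytic SE below
15 / sqrt(n)        # analytic standard error of the mean
```

Here the analytic answer is known, so the simulation can be checked against it; the same recipe applies when no analytic answer is available.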

What is it (cont'd)?

- Based on Carsey & Harden (2014):

- Simulations as experiments

- Whether there's a "treatment" effect (but not why)

- Simulations help develop intuition

- Shouldn't replace analytical and theoretical reasoning

- Simulations help evaluate substantive theories and empirical results

Sometimes an analytic solution does not exist

Examples in the Literature

- Curran, West, & Finch (1996, Psych Methods) studied the performance of the $\chi^2$ test for nonnormal data in CFA

- Kim & Millsap (2014, MBR) studied the performance of the Bollen-Stine Bootstrapping method for evaluating SEM fit indices

- MacCallum, Widaman, Zhang, & Hong (1999, Psych Methods) studied sample size requirement for getting stable EFA results

- Maas & Hox (2005, Methodology) studied the sample size requirement for multilevel models

Generating Random Data in R

With MC, one simulates the process of generating the data with an assumed data generating model

- Model: including both functional form and distributional assumptions

```r
rnorm(5, mean = 0, sd = 1)
## [1]  0.8863733  1.6361050 -1.3694538 -1.1621330  1.1365392
rnorm(5, mean = 0, sd = 1)  # number changed
## [1]  0.6607360 -0.7283291  0.5751322  1.4180376 -1.5442535
```

Setting the Seed

- Most programs use algorithms to generate numbers that look random

- pseudorandom

- Completely determined by the seed

- For replicability, ALWAYS explicitly set the seed at the beginning

```r
set.seed(1)
rnorm(5, mean = 0, sd = 1)
## [1] -0.6264538  0.1836433 -0.8356286  1.5952808  0.3295078
set.seed(1)
rnorm(5, mean = 0, sd = 1)  # same seed, same number
## [1] -0.6264538  0.1836433 -0.8356286  1.5952808  0.3295078
```

Generating Data From Univariate Distributions

```r
rnorm(n, mean, sd)     # Normal distribution (mean and SD)
runif(n, min, max)     # Uniform distribution (minimum and maximum)
rchisq(n, df)          # Chi-squared distribution (degrees of freedom)
rbinom(n, size, prob)  # Binomial distribution
```

Other distributions include exponential, gamma, beta, t, F

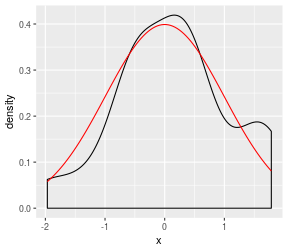

MC Approximation of N(0,1)

```r
library(tidyverse)
set.seed(123)
nsim <- 20  # 20 samples
sam <- rnorm(nsim)  # default is mean = 0 and sd = 1
ggplot(tibble(x = sam), aes(x = x)) +
  geom_density(bw = "SJ") +
  stat_function(fun = dnorm, col = "red")  # overlay normal curve in red
```

Exercise

Try increasing nsim to 100, then 1,000

Parameter vs Estimator

- Estimator/statistic: T(X), or simply T

- How well does it estimate the population parameter, $\theta$?

- Examples:

- $T = \bar{X}$ estimates $\theta = \mu$

- $T = \sum_i (X_i - \bar{X})^2 / (N - 1)$ estimates $\theta = \sigma^2$

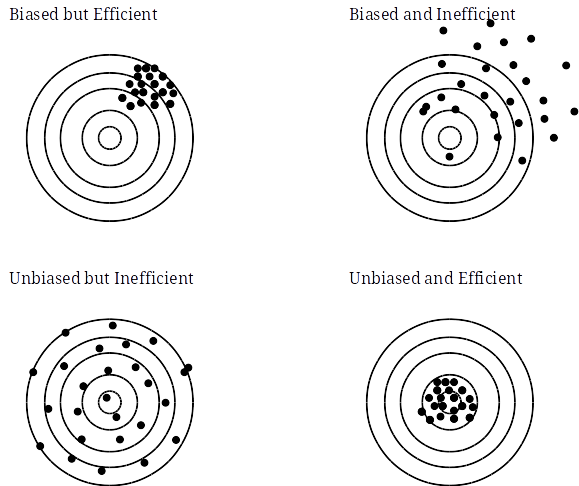

Properties of Estimators

- Bias

- Consistency

- Efficiency

- Robustness
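As one illustration of bias, the sketch below compares two variance estimators by simulation: the usual one dividing by N − 1 and an alternative dividing by N. The population (normal with variance 4), the sample size, the number of replications, and the object names are all assumptions made for this example.

```r
set.seed(123)    # arbitrary seed
n_rep <- 5000    # number of replications (assumed)
n <- 10          # sample size (assumed)
sigma2 <- 4      # true population variance (assumed)

est <- replicate(n_rep, {
  x <- rnorm(n, mean = 0, sd = sqrt(sigma2))
  c(unbiased = var(x),                    # divides by N - 1
    ml = sum((x - mean(x))^2) / n)        # divides by N
})

# Estimated bias = average estimate minus true value
bias <- rowMeans(est) - sigma2
bias
```

The N − 1 version should show a bias near zero, while the N version should be negatively biased by roughly σ²/N.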

What is a Good Estimator?

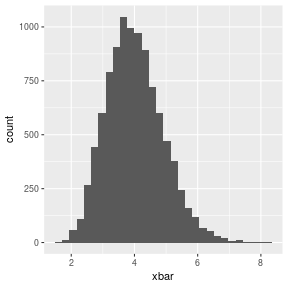

Sampling Distribution

- What is it?

Example I

Simulating Means and Medians

When to use MC?

- When it's difficult to analytically derive the sampling distribution

- E.g., indirect effect, fit-indexes; Cohen's d, SEs of estimators

- When required assumptions are violated

- E.g., normality, large sample

- Model is misspecified

- Used to check robustness of the estimator

A Simulation Study is an Experiment

| Experiment | Simulation |
|---|---|
| Independent variables | Design factors |
| Experimental conditions | Simulation conditions |
| Controlled variables | Other parameters |
| Procedure/Manipulation | Data generating model |
| Dependent variables | Evaluation criteria |
| Substantive theory | Statistical theory |
| Participants | Replications |

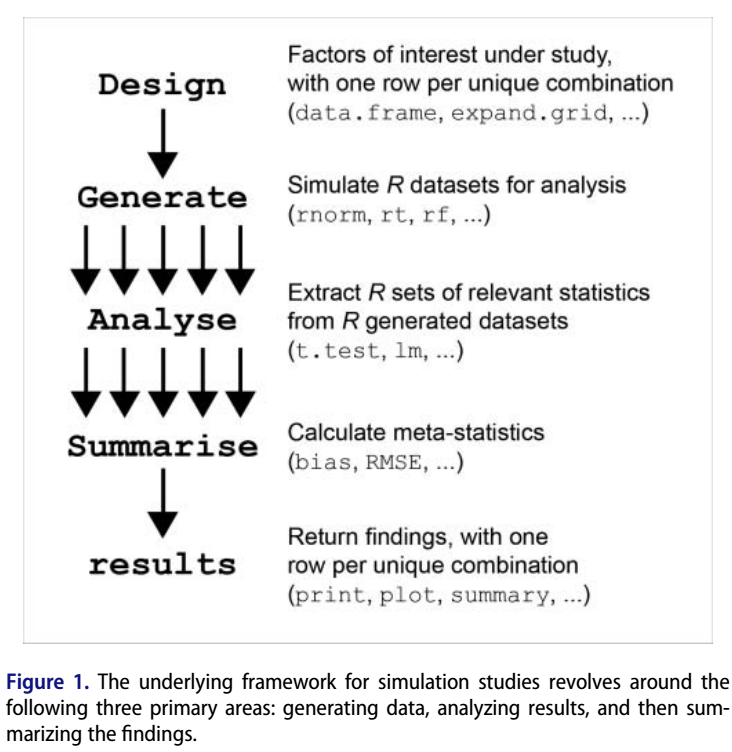

(Sigal and Chalmers, 2016, Figure 1, p. 141)

Design

Like experimental designs, conditions should be carefully chosen

- What to manipulate? Sample size? Effect size? Why?

- Based on statistical theory and reasoning

- E.g., Gauss-Markov theorem: OLS regression coefficients remain unbiased even when distributional assumptions (e.g., normality of errors) are violated

- What levels? Why?

- Needs to be realistic for empirical research

- Maybe based on previous systematic reviews,

- Or a small review of your own

Design (cont'd)

Full factorial designs are most commonly used

Alternatives include fractional factorial designs, random levels, etc.

- See Skrondal (2000) for why they should be used more often
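A full factorial design can be laid out as a table of conditions, one row per cell. The sketch below uses base R's `expand.grid()`; the factor names and levels are hypothetical and only meant to show the layout.

```r
# Hypothetical design factors and levels (not from the slides)
design <- expand.grid(
  n = c(50, 100, 200),                    # sample size
  beta1 = c(0, 0.1, 0.3, 0.5),            # effect size
  model = c("correct", "misspecified"),   # analytic model
  stringsAsFactors = FALSE
)

nrow(design)  # number of conditions: 3 x 4 x 2
head(design)
```

Each row can then be passed to the generate-analyze-summarize steps, so that every combination of levels is simulated.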

Generate

- Starts with a statistical data generating model

- E.g., $Y_i = \beta_0 + \beta_1 X_i + e_i$, $e_i \overset{\text{i.i.d.}}{\sim} N(0, \sigma^2)$

- Systematic (deterministic) component: $X_i$

- Random (stochastic) component: $e_i$

- Constants (parameters): $\beta_0$, $\beta_1$

- $Y_i$ completely determined by $X_i$, $e_i$, $\beta_0$, $\beta_1$
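The regression model above translates almost line by line into R. The parameter values, the sample size, and the distribution used for the predictor are assumptions chosen only for illustration.

```r
set.seed(1)      # arbitrary seed
n <- 100         # sample size (assumed)
beta0 <- 1       # intercept (assumed)
beta1 <- 0.5     # slope (assumed)
sigma <- 2       # error SD (assumed)

x <- runif(n, min = 0, max = 10)     # systematic component (assumed uniform)
e <- rnorm(n, mean = 0, sd = sigma)  # random component, i.i.d. N(0, sigma^2)
y <- beta0 + beta1 * x + e           # y is fully determined by x, e, beta0, beta1

coef(lm(y ~ x))  # estimates should be in the neighborhood of beta0 and beta1
```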

Model-Based Simulation

Analyze

Analyze the simulated data using one or more analytic approaches

- Misspecification: study the impact when analytic model omits important aspects of data generating model

- E.g., ignoring clustering

- Comparison of approaches

- E.g., Maximum likelihood vs. multiple imputation for missing data handling

Summarize (Evaluation Criteria)

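- $\bar{\hat{\theta}} = \sum_{i=1}^{R} \hat{\theta}_i / R$

- $\widehat{SD}(\hat{\theta}) = \sqrt{\sum_{i=1}^{R} (\hat{\theta}_i - \bar{\hat{\theta}})^2 / R}$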

For evaluating estimators:

- Bias

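- Raw: $\bar{\hat{\theta}} - \theta$

- Relative: Bias / $\theta$

- Standardized: Bias / $\widehat{SD}(\hat{\theta})$

- Relative efficiency (only for unbiased estimators)

- $RE(\hat{\theta}, \tilde{\theta}) = \widehat{Var}(\tilde{\theta}) / \widehat{Var}(\hat{\theta})$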
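These criteria can be computed directly from the replications. The sketch below compares the sample mean and the sample median as estimators of the center of a normal population; the population, sample size, and number of replications are assumptions. Relative bias is skipped because the true value is set to 0 here.

```r
set.seed(42)     # arbitrary seed
n_rep <- 2000    # number of replications R (assumed)
n <- 30          # sample size (assumed)
mu <- 0          # true parameter (assumed)

est <- replicate(n_rep, {
  x <- rnorm(n, mean = mu, sd = 1)
  c(mean = mean(x), median = median(x))
})

theta_bar <- rowMeans(est)                      # average estimate per estimator
emp_sd <- sqrt(rowMeans((est - theta_bar)^2))   # empirical SD, denominator R

raw_bias <- theta_bar - mu
std_bias <- raw_bias / emp_sd

# RE(median, mean) = Var(mean) / Var(median), following the formula above
re <- unname(emp_sd["mean"]^2 / emp_sd["median"]^2)
re
```

A value of `re` below 1 indicates that, for normal data, the median has the larger sampling variance of the two.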

Evaluation Criteria (cont'd)

For uncertainty estimators

- SE bias

- Raw: $\overline{SE(\hat{\theta})} - \widehat{SD}(\hat{\theta})$

- Relative: SE bias / $\widehat{SD}(\hat{\theta})$
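As a sketch of SE bias, the code below compares the average model-based standard error of an OLS slope with the empirical SD of the slope estimates across replications. The data generating values are assumptions, and the model is correctly specified, so the relative SE bias should be small.

```r
set.seed(10)     # arbitrary seed
n_rep <- 1000    # number of replications (assumed)
n <- 50          # sample size (assumed)
x <- rnorm(n)    # predictor values, held fixed across replications

res <- replicate(n_rep, {
  y <- 1 + 0.5 * x + rnorm(n)               # assumed data generating model
  fit <- summary(lm(y ~ x))$coefficients
  c(est = fit["x", "Estimate"], se = fit["x", "Std. Error"])
})

# Empirical SD of the slope estimates (denominator R)
emp_sd <- sqrt(mean((res["est", ] - mean(res["est", ]))^2))

se_bias <- mean(res["se", ]) - emp_sd   # raw SE bias
rel_se_bias <- se_bias / emp_sd         # relative SE bias
rel_se_bias
```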

Combining bias and efficiency

- Mean squared error (MSE): $\sum_{i=1}^{R} (\hat{\theta}_i - \theta)^2 / R$

- Also = $\text{Bias}^2 + \widehat{Var}(\hat{\theta})$

- Root MSE (RMSE) = $\sqrt{\text{MSE}}$

- Mainly to compare 2+ estimators
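The decomposition MSE = Bias² + Var can be checked numerically. The sketch below uses a deliberately biased estimator (the variance estimator that divides by N) so that both terms are nonzero; all settings are assumptions for illustration.

```r
set.seed(7)      # arbitrary seed
n_rep <- 2000    # number of replications R (assumed)
n <- 10          # sample size (assumed)
sigma2 <- 1      # true variance (assumed)

theta_hat <- replicate(n_rep, {
  x <- rnorm(n)
  sum((x - mean(x))^2) / n   # variance estimator dividing by N
})

bias <- mean(theta_hat) - sigma2
var_hat <- mean((theta_hat - mean(theta_hat))^2)  # denominator R
mse <- mean((theta_hat - sigma2)^2)
rmse <- sqrt(mse)

all.equal(mse, bias^2 + var_hat)  # the identity holds when Var uses denominator R
```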

Evaluation Criteria (cont'd)

For statistical inferences:

- Power/Empirical Type I error rates

- % with p<α (usually α = .05)

- Coverage of C% CI (e.g., C = 95%)

- % where the sample CI contains θ
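For inference criteria, each replication records whether the test rejected and whether the interval covered the true value. The sketch below does this for a one-sample t test when the null hypothesis is true, so the rejection rate estimates the Type I error rate; the settings are assumptions.

```r
set.seed(2024)   # arbitrary seed
n_rep <- 2000    # number of replications (assumed)
n <- 20          # sample size (assumed)
mu <- 0          # true mean, equal to the null value (assumed)

res <- replicate(n_rep, {
  x <- rnorm(n, mean = mu, sd = 1)
  tt <- t.test(x, mu = mu)   # 95% CI by default
  c(reject = tt$p.value < .05,
    cover = tt$conf.int[1] <= mu && mu <= tt$conf.int[2])
})

rates <- rowMeans(res)  # proportion of TRUE in each row
rates                   # empirical Type I error rate and 95% CI coverage
```

Generating data with a nonzero true mean while still testing against 0 would turn the rejection rate into an estimate of power.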

| Criterion | Cutoff | Citation |
|---|---|---|
| Bias | . | . |
| Relative bias | ≤5% | Hoogland and Boomsma (1998) |
| Standardized bias | ≤.40 | Collins, Schafer, and Kam (2001) |
| SE bias | . | . |
| Relative SE bias | ≤10% | Hoogland and Boomsma (1998) |
| MSE | . | . |
| RMSE | . | . |
| Empirical Type I error (α = .05) | 2.5% - 7.5% | Bradley (1978) |
| Power | . | . |
| 95% CI Coverage | 91%-98% | Muthén and Muthén (2002) |

Results

Analyze the results just as you would analyze real data

- Plots, figures

- ANOVA, regression

- E.g., 3 (sample size) × 4 (parameter values) × 2 (models) design: 2 between factors and 1 within factor

Example II

Simulation Example on Structural Equation Modeling

Number of Replications

Should be justified rather than relying on rules of thumb

Why Does MC Work?

- Law of large numbers

- $\sum_{i=1}^{R} T_i / R \overset{p}{\to} \theta$

- As $R$ grows, the empirical distribution $\hat{F}(t)$ converges to the true sampling distribution $F(t)$.
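Both points can be seen in a small simulation where the true sampling distribution is known. The sketch below takes the statistic to be the mean of 10 draws from an assumed N(100, 15²) population, so its sampling distribution is N(100, 15²/10); all settings are illustrative.

```r
set.seed(99)     # arbitrary seed
R <- 10000       # number of replications (assumed)
n <- 10          # sample size (assumed)

t_i <- replicate(R, mean(rnorm(n, mean = 100, sd = 15)))

# Law of large numbers: the running average of T_i settles near 100
running_mean <- cumsum(t_i) / seq_len(R)
running_mean[c(10, 100, 1000, 10000)]

# Empirical CDF vs. true sampling distribution on a grid of values
grid <- seq(85, 115, by = 1)
max_diff <- max(abs(ecdf(t_i)(grid) - pnorm(grid, mean = 100, sd = 15 / sqrt(n))))
max_diff  # shrinks as R increases
```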

Number of Replications (cont'd)

How Good is the Approximation

- Monte Carlo (MC) Error

- Like standard error (SE) for a point estimate

- For expectations (e.g., bias)

- MC Error = $\widehat{SD}(\hat{\theta}) / \sqrt{R}$

E.g., if one wants the MC error to be ≤ 2.5% of the sampling variability, $R$ needs to be $1 / .025^2 = 1{,}600$
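The arithmetic in this example can be wrapped in two small helper functions. The function names are hypothetical, introduced only for this sketch.

```r
# MC error of a simulated expectation, given the empirical SD and R
mc_error_mean <- function(sd_hat, R) sd_hat / sqrt(R)

# Replications needed so that MC error <= ratio * SD (hypothetical helper)
reps_needed <- function(ratio) 1 / ratio^2

reps_needed(0.025)             # target: MC error at most 2.5% of the SD
mc_error_mean(1, R = 1600)     # with SD = 1, check the resulting MC error
```

Because the MC error shrinks with the square root of R, halving it requires four times as many replications.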

Number of Replications (cont'd)

For power (also Type I error) and CI coverage,

- MC Error = $\sqrt{p(1 - p) / R}$

E.g., with R = 250, and empirical Type I error = 5%,

```r
sqrt((.05 * (1 - .05)) / 250)
## [1] 0.01378405
```

So R should be increased for more precise estimates
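To see how quickly the precision improves, the same formula can be evaluated over several values of R. The helper name and the candidate values of R are assumptions for this sketch.

```r
# MC error of an empirical proportion (hypothetical helper name)
mc_error_prop <- function(p, R) sqrt(p * (1 - p) / R)

R_values <- c(250, 1000, 5000)         # candidate numbers of replications
errors <- mc_error_prop(.05, R_values)
data.frame(R = R_values, mc_error = errors)
```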

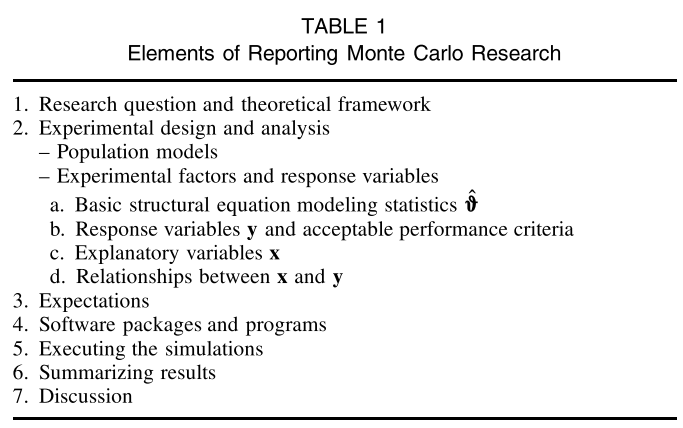

Reporting MC Results

(Boomsma, 2013, Table 1, p. 521)

See Boomsma (2013), Table 2, p. 526 for a checklist

Efficiency tips

- Things that don't change should be outside of a loop

- Initialize place holders when using for-loops

- Vectorize

- Strip out unnecessary computations

- Parallel computing (using the `future` package)
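The first few tips can be illustrated with base R alone. The sketch below computes the same set of sample means twice: once in a for-loop with a preallocated result vector, and once in vectorized form. The sizes are arbitrary, and parallel computing is left out of this sketch.

```r
R <- 1000   # number of replications (assumed)
n <- 20     # sample size (assumed)

# For-loop with a preallocated placeholder; constants are set outside the loop
set.seed(5)
means_loop <- numeric(R)
for (i in seq_len(R)) {
  means_loop[i] <- mean(rnorm(n))
}

# Vectorized: draw all values at once, one sample per column
set.seed(5)
x <- matrix(rnorm(n * R), nrow = n)
means_vec <- colMeans(x)

all.equal(means_loop, means_vec)  # same seed, same random stream, same results
```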

Other topics not covered

- Error handling

- Assessing convergence

- Debugging

- Interfacing with other software (e.g., Mplus, LISREL, HLM)

Further Readings

Carsey and Harden (2014) for a gentle introduction

Chalmers (2019) and Sigal and Chalmers (2016) for using the R package SimDesign

Harwell, Kohli, and Peralta-Torres (2018) for a review of design and reporting practices

Skrondal (2000), Serlin (2000), and Bandalos and Leite (2013) for additional topics

Thanks!

References

Bandalos, D. L. and W. Leite (2013). "Use of Monte Carlo studies in structural equation modeling research". In: Structural equation modeling: A second course. Ed. by G. R. Hancock and R. O. Mueller. 2nd ed. Charlotte, NC: Information Age, pp. 625-666.

Boomsma, A. (2013). "Reporting Monte Carlo studies in structural equation modeling". In: Structural Equation Modeling: A Multidisciplinary Journal 20, pp. 518-540. DOI: 10.1080/10705511.2013.797839.

Bradley, J. V. (1978). "Robustness?" In: British Journal of Mathematical and Statistical Psychology 31, pp. 144-152. DOI: 10.1111/j.2044-8317.1978.tb00581.x.

Carsey, T. M. and J. J. Harden (2014). Monte Carlo simulation and resampling methods for social science. Thousand Oaks, CA: Sage.

Chalmers, P. (2019). SimDesign: Structure for Organizing Monte Carlo Simulation Designs. R package version 1.13. URL: https://CRAN.R-project.org/package=SimDesign.

References (cont'd)

Collins, L. M., J. L. Schafer, and C. Kam (2001). "A comparison of inclusive and restrictive strategies in modern missing data procedures". In: Psychological Methods 6, pp. 330-351. DOI: 10.1037//1082-989X.6.4.330.

Harwell, M., N. Kohli, and Y. Peralta-Torres (2018). "A survey of reporting practices of computer simulation studies in statistical research". In: The American Statistician 72, pp. 321-327. ISSN: 0003-1305. DOI: 10.1080/00031305.2017.1342692.

Hoogland, J. J. and A. Boomsma (1998). "Robustness studies in covariance structure modeling". In: Sociological Methods & Research 26, pp. 329-367. DOI: 10.1177/0049124198026003003.

Muthén, L. K. and B. O. Muthén (2002). "How to use a Monte Carlo study to decide on sample size and determine power". In: Structural Equation Modeling 9, pp. 599-620. DOI: 10.1207/S15328007SEM0904_8.

Serlin, R. C. (2000). "Testing for robustness in Monte Carlo studies". In: Psychological Methods 5, pp. 230-240. DOI: 10.1037//1082-989X.5.2.230.

References (cont'd)

Sigal, M. J. and R. P. Chalmers (2016). "Play it again: Teaching statistics with Monte Carlo simulation". In: Journal of Statistics Education 24.3, pp. 136-156. ISSN: 1069-1898. DOI: 10.1080/10691898.2016.1246953.

Skrondal, A. (2000). "Design and analysis of Monte Carlo experiments". In: Multivariate Behavioral Research 35, pp. 137-167. DOI: 10.1207/S15327906MBR3502_1.